Guest post: Paul Gugliuzza is a Professor of Law at Temple University Beasley School of Law, Jordana R. Goodman is an Assistant Professor of Law at Chicago-Kent College of Law and an innovator in residence at the Massachusetts Institute of Technology, and Rachel Rebouché is the Dean and the Peter J. Liacouras Professor of Law at Temple University Beasley School of Law.

This post is part of a series by the Diversity Pilots Initiative, which advances inclusive innovation through rigorous research. The first blog in the series is here, and resources from the first conference of the initiative are available here.

The ongoing reckonings with systemic racism and sexism in the United States might seem, at first glance, to have little to do with patent law. Yet scholarship on racial and gender inequality in the patent system is growing. Recent research has, for example, shown that women and people of color are underrepresented among patent-seeking inventors and among lawyers and agents at the PTO. In addition, scholars have explored racist and sexist norms baked into the content of patent law itself.

In a new article, we empirically examine racial and gender inequality in what is perhaps the highest-stakes area of patent law practice: appellate oral argument at the Federal Circuit.

Unlike many prior studies of inequality in the patent system, which look at race or gender in isolation, our article looks at race and gender in combination. The intersectional approach we deploy leads to several new insights that, we think, highlight the importance of getting beyond “single-axis categorizations of identity”—a point Kimberlé Crenshaw made when introducing the concept of intersectionality three decades ago.

The dataset we hand built and hand coded for our study includes information about the race and gender of over 2,500 attorneys who presented oral argument in a Federal Circuit patent case from 2010 through 2019—roughly 6,000 arguments in total. Our dataset is unique not only because it contains information about both race and gender but also because it includes information about case outcomes, which allows us to assess whether certain cohorts of attorneys win or lose more frequently at the Federal Circuit.

Perhaps unsurprisingly, we find that the bar arguing patent appeals at the Federal Circuit is overwhelmingly white, male, and white + male, as indicated by the figures below, which break down, in a variety of ways, the gender and race of the lawyers who argued Federal Circuit patent cases during the decade covered by our study. (Note that the figures report the total number of arguments delivered by lawyers in each demographic category. Note also that we were able to code the race of the arguing lawyer for slightly fewer arguments than we could code for gender, so the totals reported in the figures differ slightly.)

Federal Circuit Patent Case Oral Arguments, 2010-2019

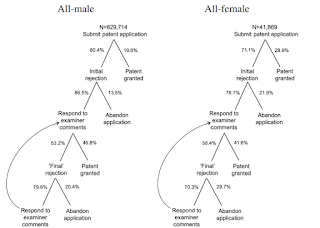

What is surprising, however, is that the racial and gender disparities illustrated above dwindle when we look only at arguments by lawyers appearing on behalf of the government, as shown in the figures below, which limit our data to arguments by government lawyers. (About 75% of those government arguments were by lawyers from the PTO Solicitor’s Office; the others came from a variety of agencies, including the ITC and various components of the DOJ.)